In today's digital landscape, online activities have given rise to essential data generated through user interactions. Clickstream data, which records the sequence of web pages a user visits, provides crucial information that can affect how we adjust and improve our marketing strategies.

However, the presence of bots has harmed clickstream data, often distorting its accuracy and reliability. Understanding the influence of bots on clickstream data is essential for businesses and brands to lessen their negative impact and make informed decisions based on accurate and reliable data.

So, let's learn more about why you should identify bots that visit your sites and how to differentiate them from human clicks.

Bot Clicks—Why We Hate Them

While bots may seem like harmless little visitors that hop into your site occasionally, they can affect far more than just views. Here are a few ways bots can seriously jeopardize your brand and wallet.

1. Higher Cost

If you've invested in advertising agencies, bots can give you quite a headache. There are three common ways bots can drain your funds through agencies: click fraud, inflated impressions, and false conversions.

Bots designed for click fraud can generate fake ad clicks, artificially inflating the number of interactions on your ads. Under pay-per-click pricing, advertisers are often billed for these fraudulent clicks, wasting ad spend without any genuine user engagement.

The higher the click fraud rate, the more money businesses lose on ineffective advertising.
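To put rough numbers on this, here is a minimal sketch that estimates wasted spend under a simple pay-per-click model. The function name, the cost per click, and the fraud rate are illustrative assumptions, not figures from any ad platform.

```python
def wasted_ad_spend(total_clicks: int, cost_per_click: float, fraud_rate: float) -> float:
    """Estimate the money spent on fraudulent clicks.

    fraud_rate is the assumed fraction of clicks (0.0 to 1.0) generated by bots.
    """
    if not 0.0 <= fraud_rate <= 1.0:
        raise ValueError("fraud_rate must be between 0 and 1")
    return total_clicks * cost_per_click * fraud_rate


# Hypothetical campaign: 10,000 clicks at $0.50 each, 25% of them fraudulent.
print(wasted_ad_spend(10_000, 0.50, 0.25))  # 1250.0
```

In this made-up campaign, a quarter of the $5,000 budget buys no real attention at all.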

Bots can also inflate the number of ad impressions by repeatedly loading web pages or refreshing content. This leads to an overestimation of the reach and exposure of ad campaigns. Advertisers are then charged for impressions that never reach genuine users, wasting advertising dollars on fake views.

Conversions are also prey to bots, which can fake form submissions, sign-ups, or even purchases. This can mislead brand owners into believing their campaigns drive actual conversions and revenue.

However, these false conversions do not contribute to genuine business outcomes, leading to an inaccurate assessment of the advertising campaign's effectiveness and potentially driving up costs for future campaigns.

2. Inaccurate Data

Money is not the only thing compromised when bots run amok on your site. As the previous section showed, bot activity inflates clicks, impressions, and conversions, so the metrics you rely on no longer reflect real users.

Many brand owners make decisions, both small and significant, based on their site's metrics. While a single small decision may not directly affect a brand, several small decisions or one big decision based on inaccurate data can lead to catastrophic results.

Brands rely on accurate data to make informed decisions about their marketing strategies, product development, customer experience, and resource allocation. Inaccurate data can mislead decision-makers, leading to misguided actions and ineffective strategies.

Inaccurate data can also lead to a lack of understanding of customer preferences, behaviors, and needs. This hinders brands from delivering personalized and relevant experiences, causing customer frustration and dissatisfaction. Negative experiences can damage both your brand reputation and customer loyalty.

3. Bad Traffic

Bots significantly impact server resources, like bandwidth and processing power. This impact poses challenges to website infrastructure, ultimately leading to performance issues. Bots operate at a large scale, generating high volumes of requests that servers must handle over the network.

When a considerable portion of clicks originates from bots, it strains server resources excessively. Bots consume CPU cycles, memory, and storage space, which reduces the availability of these resources for legitimate user requests.

Consequently, servers can become overloaded, resulting in slower response times, increased delays, and potential service disruptions.

Bandwidth is another crucial resource that bots consume. Each bot request requires data transfer, taking up a portion of the available bandwidth. When a significant proportion of clicks comes from bots, it can saturate the bandwidth, limiting its capacity to handle genuine user traffic.

As a result, users may experience slower page loading, longer file download times, and an overall degraded user experience.

Furthermore, bots' automated activities, such as scraping, crawling, or repetitive tasks, impact the processing power of servers. These activities monopolize processing resources, hindering the server's ability to handle other essential tasks and diminishing overall performance.

4. Poor Security

There are four ways in which bots compromise a site's security: spamming and phishing, content scraping and intellectual property theft, unauthorized access attempts, and SEO manipulation.

Bots can distribute spam emails, comments, or messages across a website. These unsolicited messages can contain malicious links or phishing attempts, tricking users into sharing sensitive information or installing malware.

Such activities undermine user trust and can lead to financial losses and reputational damage for businesses.

Bots can also be programmed to scrape website content, including copyrighted material, product listings, pricing information, or proprietary data. Intellectual property theft can harm businesses by enabling competitors to replicate their offerings, affecting market share and revenue.

The third way bots compromise a website's security is through unauthorized access attempts. Bots can continuously probe a website for weaknesses, attempting to gain unauthorized access to sensitive information or exploit security loopholes.

They may use brute-force attacks, credential stuffing, or other automated techniques to bypass authentication systems and gain entry. These malicious activities can lead to data breaches, unauthorized account access, or the compromise of sensitive user information.

Finally, bots can use black-hat SEO techniques to manipulate search engine rankings, such as generating spammy backlinks or keyword stuffing. This can result in a website being penalized or even delisted by search engines, leading to reduced visibility and traffic.

How to Differentiate Bots from Humans with Short.io

Bots continue to be a growing problem for business websites. The first step to counteracting this problem is to identify them. And while dealing with bots may be challenging, identifying them can be a piece of cake if you have the right service.

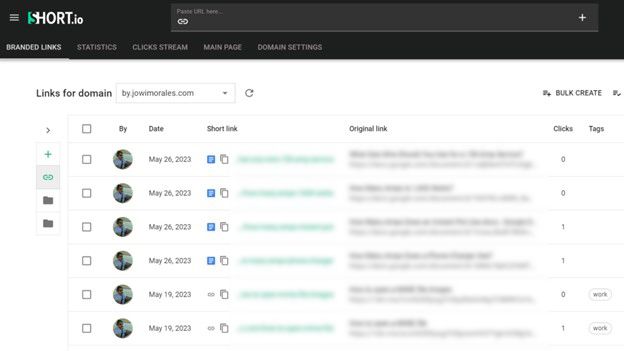

We mainly use Short.io to identify whether views are from humans or bots. With Short.io, the process is straightforward. It only takes three steps. Here's how you can do it.

- Log into your Short.io account.

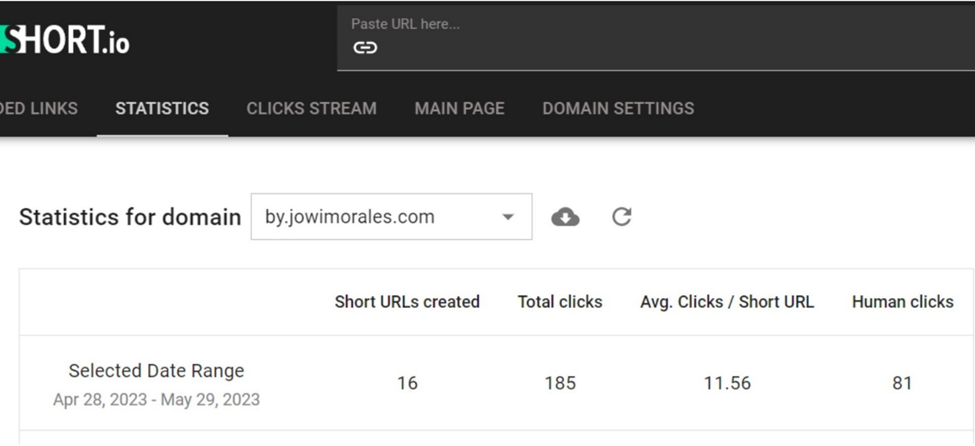

- In your Dashboard, click Statistics.

- On the upper portion of the statistics, you should be able to see Human Clicks and Total Clicks.

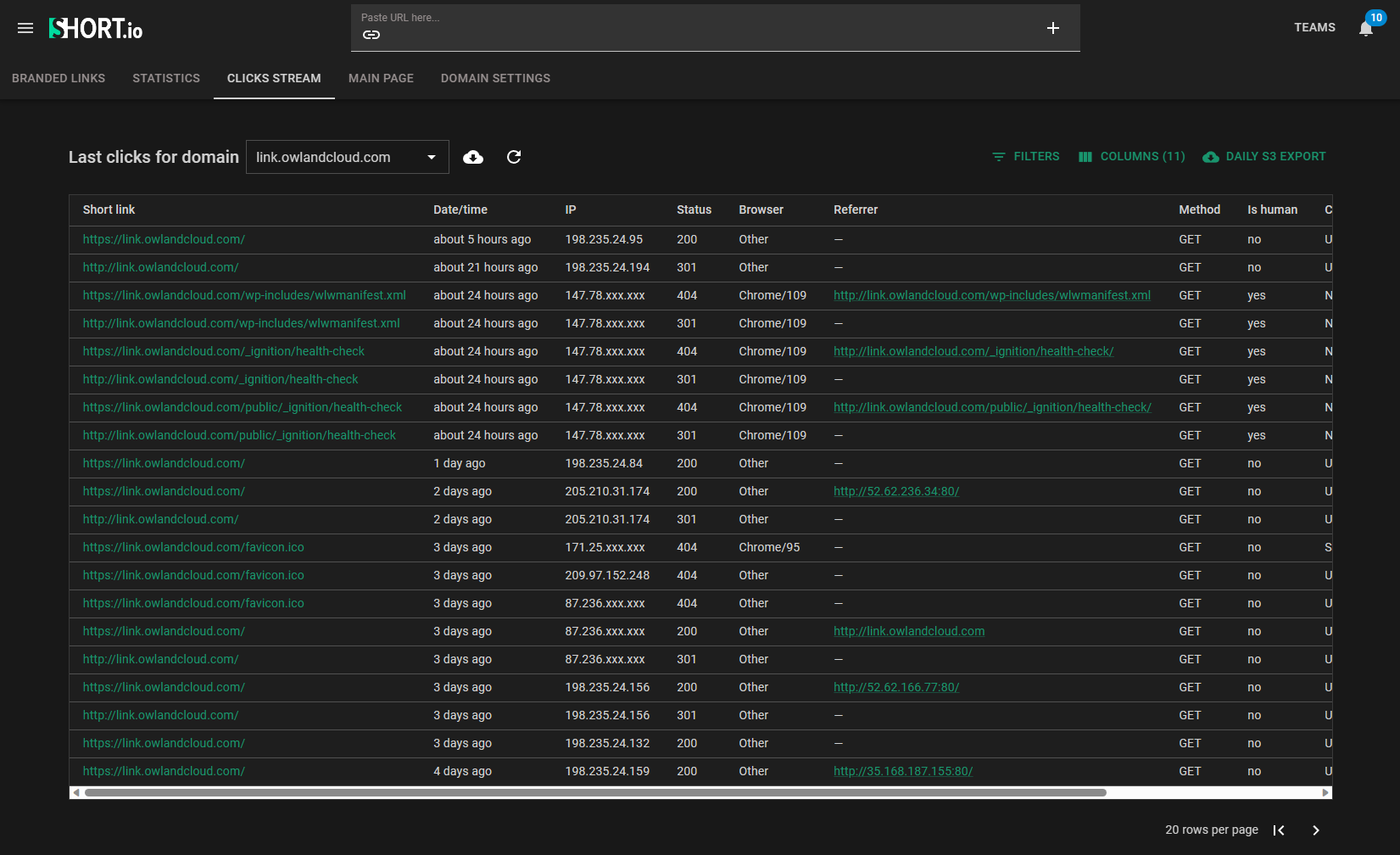
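Once you have both numbers, the bot share is simple arithmetic. The sketch below assumes that every click not counted under Human Clicks is automated; the function name and the figures are hypothetical and are not part of Short.io.

```python
def bot_share(total_clicks: int, human_clicks: int) -> float:
    """Return the fraction of clicks that did not come from humans."""
    if total_clicks < 0 or human_clicks < 0 or human_clicks > total_clicks:
        raise ValueError("expected 0 <= human_clicks <= total_clicks")
    if total_clicks == 0:
        return 0.0  # no traffic at all, so nothing to attribute to bots
    return (total_clicks - human_clicks) / total_clicks


# Hypothetical figures read off the Statistics page.
share = bot_share(total_clicks=2_000, human_clicks=1_500)
print(f"{share:.0%} of clicks look automated")  # 25% of clicks look automated
```

Tracking this ratio over time can help you notice when a campaign suddenly attracts an unusual amount of automated traffic.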

You can also see the clicks you're getting live via the Clicks Stream. Here's how:

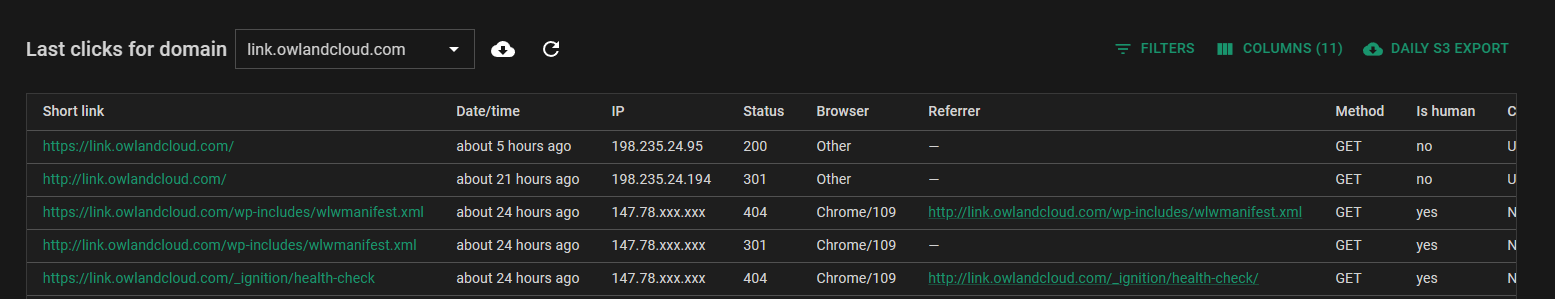

- On your Short.io Dashboard, click Clicks Stream.

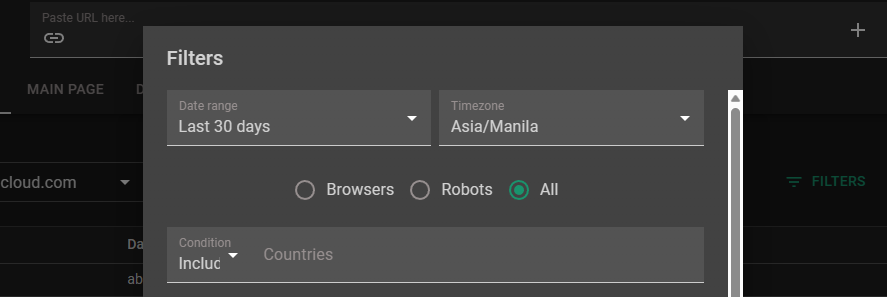

- Once you're on the Clicks Stream view, click on Filters.

- The filter window should show you several options. Near the top of the options, click the Browsers radio button. This will remove all bot clicks from your Clicks Stream, allowing you to see legitimate visits to your short links.

And with these steps, you can tell just how much engagement you're getting. You can identify whether your site is plagued by bots or not.
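If you also work with raw click logs outside the dashboard, the same idea can be approximated in code. The following is a minimal sketch, assuming each click record carries a user-agent string; the record fields and the marker list are hypothetical, and this simple heuristic is not how Short.io's own filter works.

```python
BOT_MARKERS = ("bot", "crawler", "spider", "headless")  # illustrative, not exhaustive


def is_probable_bot(user_agent: str) -> bool:
    """Flag a click whose user agent is empty or contains a known bot marker."""
    ua = user_agent.strip().lower()
    return not ua or any(marker in ua for marker in BOT_MARKERS)


def human_clicks(clicks: list[dict]) -> list[dict]:
    """Keep only the clicks that do not look automated."""
    return [c for c in clicks if not is_probable_bot(c.get("user_agent", ""))]


# Hypothetical click records; the field names are assumptions.
clicks = [
    {"link": "/promo", "user_agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Firefox/126.0"},
    {"link": "/promo", "user_agent": "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"},
    {"link": "/promo", "user_agent": ""},
]
print(len(human_clicks(clicks)))  # 1
```

User-agent matching only catches bots that identify themselves, so treat the result as a lower bound on automated traffic.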

Short.io Gives You Accurate Data

While bots wreak havoc on your site and feed you false data, Short.io was designed to do the opposite. Short.io is a versatile link management platform that helps businesses create, customize, and track short links, improving user experience, data accuracy, and security.