You can keep your short links out of Google Search results by managing the short domain's settings. Short.io lets you edit the search engine policy, which in turn modifies the domain's robots.txt file.

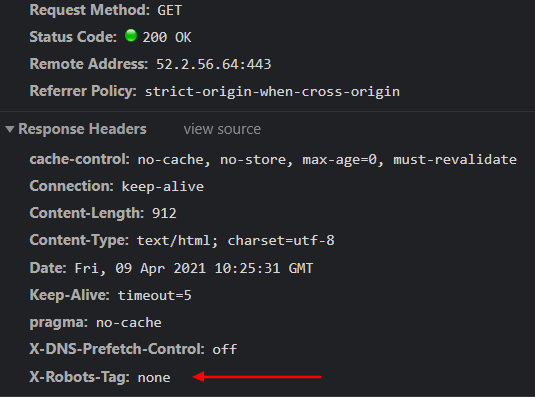

To completely prevent short links from appearing in Google Search results, we need to send "X-Robots-Tag: none" in the HTTP response headers. The "none" value is equivalent to "noindex, nofollow". When Googlebot next crawls the link and sees the header, it will drop the page entirely from Google Search results, regardless of whether other sites link to it.

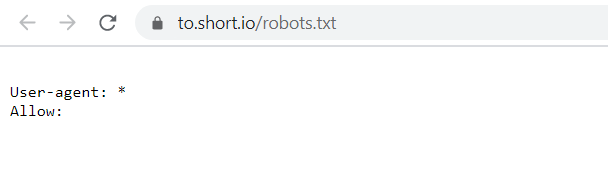
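To confirm that a short link really returns this header, you can inspect the response yourself. The sketch below is a minimal example, not an official Short.io tool: the function names `blocks_indexing` and `fetch_x_robots_tag` are made up for illustration, and the parser assumes a plain comma-separated header value without a user-agent prefix. It uses `http.client` so that the redirect is not followed and the header is read from the short link's own response.

```python
import http.client
from typing import Optional


def blocks_indexing(x_robots_tag: str) -> bool:
    """Return True if an X-Robots-Tag value asks crawlers not to index the page.

    Assumption: the value is a simple comma-separated list such as
    "none" or "noindex, nofollow" (no "googlebot:" style prefix).
    """
    directives = {d.strip().lower() for d in x_robots_tag.split(",")}
    return bool(directives & {"none", "noindex"})


def fetch_x_robots_tag(host: str, path: str) -> Optional[str]:
    """Request a short link without following redirects and return its
    X-Robots-Tag header, or None if the header is absent."""
    conn = http.client.HTTPSConnection(host, timeout=10)
    try:
        conn.request("GET", path)
        return conn.getresponse().getheader("X-Robots-Tag")
    finally:
        conn.close()


if __name__ == "__main__":
    # Placeholder domain and slug: replace with your own short link.
    value = fetch_x_robots_tag("example.com", "/my-link")
    print(value, blocks_indexing(value or ""))
```

If the second printed value is `True`, the link is telling crawlers not to index it.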

To get this behavior, choose "Don't index short links" in the short domain settings. If you then check the robots.txt file, you will see that it contains an "Allow" rule, which is correct.

For the noindex directive to be effective, the page must not be blocked by a robots.txt file, and it has to be otherwise accessible to the crawler. If the page is blocked by a robots.txt file or the crawler can't access the page, the crawler will never see the noindex directive, and the page can still appear in search results if, for example, other pages link to it.

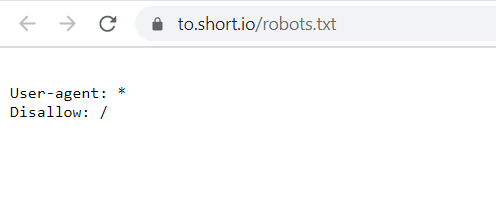

If you choose "Don't follow," we switch robots.txt to disallow mode, which prevents crawlers from visiting the URLs and therefore from seeing the directive. Please note that in this case Google can still index your links if other sites link to them.
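The difference between the two robots.txt modes can be checked with Python's standard `urllib.robotparser`. This is a rough sketch: the two robots.txt bodies are simplified assumptions about what "Allow" and "Disallow" modes look like, not the exact files Short.io serves, and `crawler_can_see_header` is a hypothetical helper name.

```python
from urllib.robotparser import RobotFileParser

# Assumed, simplified robots.txt contents for the two modes.
ALLOW_MODE = "User-agent: *\nAllow: /\n"
DISALLOW_MODE = "User-agent: *\nDisallow: /\n"


def crawler_can_see_header(robots_txt: str, url: str,
                           user_agent: str = "Googlebot") -> bool:
    """Return True if robots.txt lets the crawler fetch the URL.

    Only a crawler that is allowed to fetch the URL will ever receive
    the X-Robots-Tag header sent with the response.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    link = "https://example.com/my-link"  # placeholder short link
    print(crawler_can_see_header(ALLOW_MODE, link))
    print(crawler_can_see_header(DISALLOW_MODE, link))
```

In allow mode the crawler can fetch the link and read the noindex directive. In disallow mode it cannot, so the link may still be indexed if other sites link to it.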

How to Block Link Indexing

The article is about:

- how to prevent link indexing

- how to stop link indexing

- how to ignore short links on google results
